Since the end of World War II, most American college students have attended schools in cities and metropolitan areas. Mirroring the rapid urbanization of the United States in the late 19th and early 20th centuries, this trend reflects the democratization of college access and the enormous growth in the number of commuter students who live at home while attending college.

Going to college in the city seems so normal now that it’s difficult to comprehend that it once represented a radical shift not only in the location of universities, but also in their ideals.

From the founding of Harvard in 1636 onward, college leaders held a negative view of cities in general, and a deep-seated belief that cities were ill-suited to educating young men and women. In 1883, Charles F. Thwing, a minister with a strong interest in higher education, wrote that a significant number of city-bred students “are immoral on their entering college” because city environments have “for many of them been excellent preparatory schools for Sophomoric dissipation.” “Even home influences,” he wrote, “have failed to outweigh the evil attractions of the gambling table and its accessories.”

In the late 19th and early 20th centuries, higher education leaders believed that the purpose of a college education, first and foremost, was to build character in young people—and that one could not build character in a city. When Woodrow Wilson was president of Princeton University, he wrote that college must promote “liberal culture” in a “compact and homogenous” residential campus, explaining that “you cannot go to college on a streetcar and know what college means.” The danger of cities was so self-evident that even the president of the City College of New York, Frederick Robinson, lamented to a 1928 conference of urban university leaders that commuter students “do not enter into a student life dominated night and day by fellow students” and therefore “miss the advantages of spiritual transplanting.”

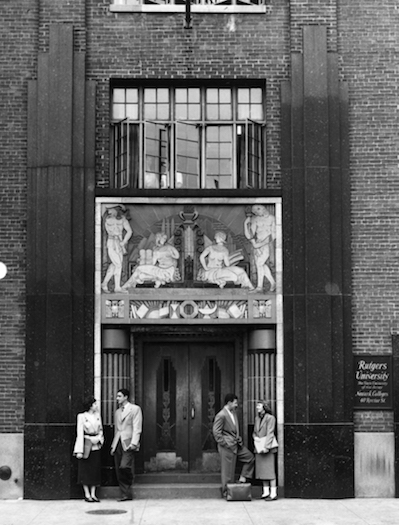

A 1950 photo of the downtown brewery that housed the University of Newark both before and for several years after it officially became part of Rutgers University in 1946. Image courtesy of Rutgers University-Newark Library.

Urban colleges struggled to overcome the handicap of their locations. Columbia University, for example, moved three times to escape the encroaching city. When it opened its new campus in 1897 in Morningside Heights, then a largely rural area of northern Manhattan, the university bounded the grounds with walls and planted many trees inside them to isolate students from the urban growth that would eventually surround the school. Campuses with large numbers of commuters initiated a range of programs to “Americanize” students and get them to move beyond the culture of their working-class and immigrant neighborhoods. However, universities in cities also took advantage of the opportunities the city offered for research, teaching, and collaborations with local museums and cultural institutions.

After World War II, federal and state governments increasingly saw college education as critical, a view reflected in the G.I. Bill and the massive expansion of state universities. College attendance in America grew dramatically, from 1.5 million in 1940 to 2.7 million in 1950, 3.6 million in 1960, and 7.9 million in 1970. By the 1960s, government officials and civil rights leaders also sought to expand access to higher education for low-income students in order to enable poor people to move into the middle class. Two-year community colleges opened across the country, largely in cities. City University of New York inaugurated “open enrollment,” guaranteeing that any high school graduate could attend a CUNY institution.

But even as the higher education landscape changed dramatically, the term “urban university” still carried a stigma: it connoted low-status institutions that enrolled large numbers of local commuter students, who were seen as socially unrefined and academically weak. Many universities in cities therefore tried to avoid the label. In 1977, the Association of Urban Universities, founded in 1914, voted itself out of existence, a sign of how strongly its members resisted the organization’s own name.

Then, over the last 25 years or so, higher education’s longstanding ambivalence about urban students and colleges has evaporated. As many central cities have been dramatically revitalized, growing numbers of upper-middle-class people have chosen to live in them. In addition, cities increasingly appeal to relatively affluent young people who grew up in homogeneous, low-density suburbs. Cities are now “cool,” and the kind of worldly education they offer is in demand.

By 2012, an NYU admissions administrator told a Chronicle of Higher Education writer that “whereas 20 years ago the city was our Achilles’ heel, it’s now our hallmark.” Freshman applications to NYU grew from 10,862 in 1992 to 43,769 in 2012, roughly a fourfold increase. Two years later, the Chronicle of Higher Education ran an article entitled “Urban Hot Spots Are the Place to Be,” arguing that “a college’s location might be more important than ever to its long-term prosperity as a residential campus,” because college students seek “hands-on experiences” that are most readily available in the “vibrant economy of cities.”

As students and schools have changed, innovations that colleges in cities introduced, once controversial, have prevailed. City institutions led the democratization of undergraduate education, and universities across the country now strive to enroll large numbers of the kinds of “urban students,” including immigrants and minorities, whom many in the academy once viewed with deep skepticism. It was urban colleges, particularly municipal institutions like City College and Hunter College, that first offered college to commuters who could not afford to live away from home while in school.

Today, the overwhelming majority of college students commute. Urban colleges also initiated programs, controversial at the time, for adults and part-time students, including evening classes. Today, adult, part-time, and evening courses are nearly universal in state universities and widespread in private institutions. The broad access to college that was initiated by innovative city institutions is now central to the overall mission of American higher education.

Urban colleges also changed curriculums and research agendas by developing a commitment to community-based research, taking advantage of the extensive resources of the city, and encouraging the study of local problems and policy issues. This kind of research is now widely practiced. The Engagement Scholarship Consortium, founded in 1999, encourages all universities to do research that is important to their communities. Its member institutions are located in cities, towns, and rural areas.

Relatedly, service learning and community engagement by college students have become a central focus of American higher education, vigorously championed by organizations like the National Society for Experiential Education and federal agencies like the Corporation for National and Community Service. This is another area pioneered by the so-called urban colleges.

Universities are also seen as key players in the economic development of their communities, a change that would not have occurred without the leadership of urban schools. In 1994, Harvard Business School professor Michael Porter founded the Initiative for a Competitive Inner City to “spark new thinking about the business potential of inner cities.” In 2001, CEOs for Cities and the Initiative for a Competitive Inner City released a study arguing that higher education institutions are well-positioned “to spur economic revitalization of our inner cities.”

The following year, Carnegie Mellon professor Richard Florida published The Rise of the Creative Class, arguing that economic development depended on a “creative class” and that universities were “a key institution of the Creative Economy.” Florida re-envisioned the city as a wellspring of economic growth and intellectual activity, with the university and its knowledge at the center. Universities have become key drivers of economic development in the post-industrial technology economy, not just in inner cities but across the nation. Old prejudices about the urban university are effectively dead.

The purposes of colleges and universities, and of undergraduate education itself, are still widely debated, and many people are deeply critical of American higher education. That debate makes it important to understand the history and value of college in the United States. Today, access to college makes it possible for millions of Americans to improve their socio-economic status and to live richer lives. Many do so while living at home, working, and attending part-time. Colleges teach traditional-aged students and adults of all ages, both full-time and part-time, including many minorities, immigrants, and people from low-income families, in degree and non-degree programs. Colleges play an ever greater role in our nation’s economy. And college students engage extensively in experiential learning, developing work skills and a commitment to civic responsibility.

All of these developments began many years ago, in universities in cities. Whatever the deficiencies of American colleges, we must not forget how profoundly they serve society, and that these practices first emerged in urban institutions.

Steven J. Diner is University Professor at Rutgers University-Newark, where he served as chancellor from 2002 to 2011. He is the author of Universities and Their Cities: Urban Higher Education in America.

Primary Editor: Lisa Margonelli. Secondary Editor: Siobhan Phillips.